We recently had a discussion on the SEC call about JSON Schema. I was asked to write down the current state and to get the discussion going on how to progress. So, here it is:

Several options exist for defining a JSON format. Two of the most commonly used are:

- JSON Schema

- OpenAPI

Tools for working with JSON Schema are widespread. It is very suitable for validation, and many text editors support it out of the box. However, because OpenEHR makes extensive use of polymorphism, it is not suited for code generation.

OpenAPI's schema format is an extension of JSON Schema. The latest version (3.1) is fully compatible with JSON Schema, but it still contains extensions. To stay compatible, these extensions are defined as a JSON Schema dialect with added vocabularies, which JSON Schema allows. Processing it fully requires OpenAPI tooling; JSON Schema based tooling works, but will not be complete.
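
To make the "not complete" point concrete: a plain JSON Schema 2020-12 validator typically treats keywords it does not know, such as OpenAPI's `discriminator`, as annotations and does not act on them. The sketch below lists the keywords in a schema that fall outside a hand-picked, deliberately partial set of JSON Schema 2020-12 keywords. The keyword set and the `non_core_keywords` helper are assumptions for illustration, not part of any existing tool.

```python
"""Sketch: find keywords that plain JSON Schema tooling would not act on."""

# Partial, illustrative subset of JSON Schema 2020-12 keywords (not exhaustive).
JSON_SCHEMA_KEYWORDS = {
    "$schema", "$id", "$ref", "$defs", "$comment", "$anchor", "$dynamicRef",
    "allOf", "anyOf", "oneOf", "not", "if", "then", "else",
    "properties", "patternProperties", "additionalProperties",
    "items", "prefixItems", "type", "enum", "const", "required",
    "minItems", "maxItems", "minimum", "maximum", "pattern", "format",
    "title", "description", "default",
}
# Keywords whose values contain sub-schemas, grouped by the shape of the value.
SCHEMA_MAPS = {"properties", "patternProperties", "$defs"}
SCHEMA_LISTS = {"allOf", "anyOf", "oneOf", "prefixItems"}
SINGLE_SCHEMAS = {"items", "not", "if", "then", "else", "additionalProperties"}


def non_core_keywords(schema, path="#"):
    """Return pointer-like paths of keywords outside the subset above."""
    if not isinstance(schema, dict):
        return []  # boolean schemas (true/false) have no keywords
    found = []
    for key, value in schema.items():
        if key not in JSON_SCHEMA_KEYWORDS:
            found.append(f"{path}/{key}")
        elif key in SCHEMA_MAPS:
            # Keys here are property/definition names, not keywords.
            for name, sub in value.items():
                found += non_core_keywords(sub, f"{path}/{key}/{name}")
        elif key in SCHEMA_LISTS:
            for i, sub in enumerate(value):
                found += non_core_keywords(sub, f"{path}/{key}/{i}")
        elif key in SINGLE_SCHEMAS:
            found += non_core_keywords(value, f"{path}/{key}")
    return found


item_schema = {
    "oneOf": [
        {"$ref": "#/components/schemas/ELEMENT"},
        {"$ref": "#/components/schemas/CLUSTER"},
    ],
    "discriminator": {"propertyName": "_type"},  # OpenAPI-only keyword
}
print(non_core_keywords(item_schema))  # ['#/discriminator']
```

Here a plain validator would still evaluate the `oneOf`, but would not use the `discriminator` at all.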

One of the extensions defined in OpenAPI is a way to specify a discriminator property, which makes it possible to generate code for models that use polymorphism. Its use cases include API specification, validation and code generation. If we include it in the REST API specification, it becomes possible to generate code for an OpenEHR REST API client in many languages, including all archetype and RM models. It is also possible to generate documentation for these APIs in many formats, and to plug the definition into tooling to try out an API manually.
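
As an illustration of how a generator uses the discriminator, the sketch below writes a hypothetical OpenAPI fragment for the abstract RM type ITEM as a Python dict, and shows the dispatch that generated client code typically performs: read `_type`, look it up in the `mapping`, and build the matching class. The classes are heavily simplified (only `archetype_node_id` and `items`), and `parse_item` is a hand-written stand-in for generated code, not the output of any real generator.

```python
from dataclasses import dataclass, field

# Hypothetical OpenAPI 3.1 fragment for the abstract type ITEM.
ITEM_SCHEMA = {
    "oneOf": [
        {"$ref": "#/components/schemas/ELEMENT"},
        {"$ref": "#/components/schemas/CLUSTER"},
    ],
    "discriminator": {
        "propertyName": "_type",
        "mapping": {
            "ELEMENT": "#/components/schemas/ELEMENT",
            "CLUSTER": "#/components/schemas/CLUSTER",
        },
    },
}


@dataclass
class Element:
    archetype_node_id: str


@dataclass
class Cluster:
    archetype_node_id: str
    items: list = field(default_factory=list)


# A generator would emit one class per component schema; this table links them.
CLASS_FOR_SCHEMA = {
    "#/components/schemas/ELEMENT": Element,
    "#/components/schemas/CLUSTER": Cluster,
}


def parse_item(data: dict):
    """Choose the concrete class via the discriminator, then parse children."""
    disc = ITEM_SCHEMA["discriminator"]
    schema_ref = disc["mapping"][data[disc["propertyName"]]]
    cls = CLASS_FOR_SCHEMA[schema_ref]
    if cls is Cluster:
        children = [parse_item(child) for child in data.get("items", [])]
        return Cluster(data["archetype_node_id"], children)
    return Element(data["archetype_node_id"])


doc = {
    "_type": "CLUSTER",
    "archetype_node_id": "at0001",
    "items": [{"_type": "ELEMENT", "archetype_node_id": "at0002"}],
}
tree = parse_item(doc)
print(type(tree).__name__, type(tree.items[0]).__name__)  # Cluster Element
```

Without the discriminator, a generator only sees an abstract ITEM and has no reliable way to decide which class to instantiate for each nested object.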

Current state of JSON Schema

We currently have two JSON Schema files:

- The official one, in the openEHR/specifications-ITS-JSON repository on GitHub (JSON-Schema Specifications)

- The one generated from BMM in Archie: RM 1.1.0 json schema.json, on GitHub

specifications-ITS-JSON

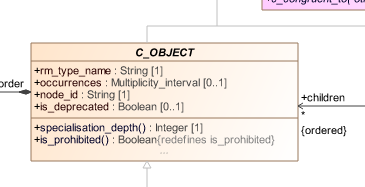

The schema in specifications-ITS-JSON is generated by Sebastian Iancu from the UML models. The code to generate it is closed source and not available to others. It has the following benefits:

- very extensive, with all documentation about the models, including which functions are defined

- a well-organised structure, with separate files per package

And the following drawbacks:

- it cannot be used for validation, because it does not implement OpenEHR polymorphism, including the _type property used to discriminate types. If the validator encounters a CLUSTER where the model says ITEM, the items attribute of the cluster and all of its content will not be checked at all.

- no root nodes are specified

- it cannot be hand-edited: it is very large, all information is duplicated across several files, and the code to generate it is not released

- slower to parse because it has many extra fields.
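
To illustrate the CLUSTER/ITEM problem described above, here is a toy validator (not a JSON Schema implementation) that checks required properties recursively. With `use_type=False` it only applies the declared type, as a schema without polymorphism constraints would; with `use_type=True` it first switches to the type named in `_type`. In real JSON Schema that switch is usually expressed with `oneOf`, or with `if`/`then` on a `_type` `const`. The `DEFS` and `SUBTYPES` tables are hypothetical, simplified stand-ins for the RM.

```python
# Hypothetical, simplified type definitions; not the real RM.
DEFS = {
    "ITEM": {"required": ["archetype_node_id"], "children": {}},
    "ELEMENT": {"required": ["archetype_node_id"], "children": {}},
    "CLUSTER": {"required": ["archetype_node_id", "items"],
                "children": {"items": "ITEM"}},  # items: list of ITEM
}
SUBTYPES = {"ITEM": {"ELEMENT", "CLUSTER"}}


def validate(data, declared, use_type=True, path="$"):
    """Return a list of error strings for `data` checked against `declared`."""
    type_name = declared
    if use_type and "_type" in data:
        actual = data["_type"]
        if actual != declared and actual not in SUBTYPES.get(declared, set()):
            return [f"{path}: {actual} is not a {declared}"]
        type_name = actual  # polymorphism: validate against the concrete type
    schema = DEFS[type_name]
    errors = [f"{path}: missing {p}" for p in schema["required"] if p not in data]
    for prop, child_type in schema["children"].items():
        for i, child in enumerate(data.get(prop, [])):
            errors += validate(child, child_type, use_type, f"{path}.{prop}[{i}]")
    return errors


doc = {
    "_type": "CLUSTER",
    "archetype_node_id": "at0001",
    "items": [{"_type": "ELEMENT"}],  # nested ELEMENT lacks archetype_node_id
}
print(validate(doc, "ITEM", use_type=False))  # []  (items are never inspected)
print(validate(doc, "ITEM", use_type=True))   # ['$.items[0]: missing archetype_node_id']
```

The broken nested ELEMENT is only found when validation follows `_type`; checked purely as an ITEM, the whole `items` subtree passes silently.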

The Archie generated JSON Schema

The Archie version is autogenerated from BMM. The code to do so is available as part of Archie, in archie/JSONSchemaCreator.java on the master branch of openEHR/archie on GitHub. It has the following benefits:

- can be used for validation, including all polymorphism except for some generic types

- tuned for speed of validation and quality of validation messages

- extensively tested against the Archie JSON mapping for the RM, even with additionalProperties: false set everywhere to test for completeness.

- the code to generate it is open source

- contains no extra information, so fast to parse.
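
The completeness test mentioned above works because `additionalProperties: false` turns every field that the serialiser writes, but the schema does not declare, into a validation error, so gaps in the schema (or bugs in the mapping) show up immediately. The sketch below mimics just that one check on serialised JSON; the `DECLARED` property sets are hypothetical and incomplete, and `extra_properties` is not part of Archie.

```python
# Hypothetical, incomplete property sets per type (the real ones come from BMM).
DECLARED = {
    "ELEMENT": {"_type", "name", "archetype_node_id", "value", "null_flavour"},
    "DV_TEXT": {"_type", "value"},
}


def extra_properties(data, type_name, path="$"):
    """Mimic additionalProperties: false by reporting undeclared keys."""
    allowed = DECLARED.get(type_name)
    if allowed is None:
        return [f"{path}: unknown type {type_name}"]
    problems = [f"{path}.{key}" for key in data if key not in allowed]
    # Recurse into nested objects (and lists of objects) that carry a _type.
    for key, value in data.items():
        children = value if isinstance(value, list) else [value]
        for i, child in enumerate(children):
            if isinstance(child, dict) and "_type" in child:
                suffix = f"[{i}]" if isinstance(value, list) else ""
                problems += extra_properties(child, child["_type"], f"{path}.{key}{suffix}")
    return problems


serialised = {
    "_type": "ELEMENT",
    "archetype_node_id": "at0002",
    "name": {"_type": "DV_TEXT", "value": "Systolic"},
    # A field the (hypothetical) schema does not declare for DV_TEXT:
    "value": {"_type": "DV_TEXT", "value": "120", "magnitude": 120},
}
print(extra_properties(serialised, "ELEMENT"))  # ['$.value.magnitude']
```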

And the following drawbacks:

- not currently separated into packages or files, just one file, although the information needed to do so is present in the BMM models.

- nearly no documentation included, because the BMM files contain very little documentation.
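
Because the BMM records which package each class belongs to, splitting the single file is mostly a matter of grouping definitions and rewriting `$ref`s that cross package boundaries. A minimal sketch, assuming a draft-07 style `definitions` section and a hypothetical, simplified `PACKAGE_OF` table (real RM package names are longer and nested):

```python
# Hypothetical class-to-package table; BMM holds the real assignment.
PACKAGE_OF = {"ELEMENT": "data_structures", "DV_TEXT": "data_types"}
PREFIX = "#/definitions/"


def rewrite_refs(node, current_pkg):
    """Point $refs at other packages' files; keep same-package refs local."""
    if isinstance(node, dict):
        out = {}
        for key, value in node.items():
            if key == "$ref" and value.startswith(PREFIX):
                target_pkg = PACKAGE_OF[value[len(PREFIX):]]
                out[key] = value if target_pkg == current_pkg else f"{target_pkg}.json{value}"
            else:
                out[key] = rewrite_refs(value, current_pkg)
        return out
    if isinstance(node, list):
        return [rewrite_refs(item, current_pkg) for item in node]
    return node


def split_by_package(single_file):
    """Return {file name: schema document}, one document per package."""
    files = {}
    for name, schema in single_file["definitions"].items():
        pkg = PACKAGE_OF[name]
        doc = files.setdefault(f"{pkg}.json", {"definitions": {}})
        doc["definitions"][name] = rewrite_refs(schema, pkg)
    return files


single = {"definitions": {
    "ELEMENT": {"properties": {"name": {"$ref": "#/definitions/DV_TEXT"}}},
    "DV_TEXT": {"properties": {"value": {"type": "string"}}},
}}
files = split_by_package(single)
print(sorted(files))  # ['data_structures.json', 'data_types.json']
print(files["data_structures.json"]["definitions"]["ELEMENT"]["properties"]["name"])
# {'$ref': 'data_types.json#/definitions/DV_TEXT'}
```

Keeping same-package references local means each file stays readable on its own, while cross-package references resolve relative to the file's location.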

Proposal for JSON Schema

We probably need a standard JSON Schema. It can be (a next iteration of) the Archie JSON Schema, or a next iteration of the current specifications-ITS-JSON. To switch to the Archie JSON Schema, we have to decide whether the current form is good enough, or whether it needs more improvements, for example documentation or splitting into a package structure. It will also need to be tested against other implementations: the current one works with Archie and EHRBase, but has not been tested against other implementations yet.

To keep the current schema, we will need to adjust it so that it contains the constraints for polymorphism, and it will need to be extensively tested. We also need to decide whether it is acceptable that this JSON Schema can only be generated with unreleased tooling, or whether we want an open tool to generate it.

Opinions?

OpenAPI

There is only one current OpenEHR OpenAPI model that I know of, covering both the archetype models and the RM. It is generated from BMM. For the AOM, a BMM is first generated from the Archie implementation, because no BMM model of the AOM is available. The code is open source, in openEHR/archie pull request #180 ("First version of a working open API model generator" by pieterbos), and the output, including a demo of how to use it for code generation, is available at nedap/openehr-openapi on GitHub ("An example project to show how OpenAPI can work with OpenEHR").
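
The core step of such a generator is turning every reference to an abstract or polymorphic BMM type into a `oneOf` over its concrete descendants plus a `discriminator` on `_type`. The sketch below shows that step on a tiny, hypothetical input format (`CLASSES` is not the real BMM/P_BMM file format), and is not the code from the Archie pull request.

```python
# Hypothetical, simplified stand-in for BMM class definitions.
CLASSES = {
    "ITEM": {"abstract": True, "parent": None,
             "properties": {"archetype_node_id": "String"}},
    "ELEMENT": {"abstract": False, "parent": "ITEM", "properties": {}},
    "CLUSTER": {"abstract": False, "parent": "ITEM",
                "properties": {"items": "List<ITEM>"}},
}


def schema_ref(name):
    return f"#/components/schemas/{name}"


def concrete_descendants(name):
    """The class itself (if concrete) plus all concrete subclasses."""
    result = [] if CLASSES[name]["abstract"] else [name]
    for child, spec in CLASSES.items():
        if spec["parent"] == name:
            result += concrete_descendants(child)
    return result


def type_schema(bmm_type):
    """Map a BMM type name to an OpenAPI schema."""
    if bmm_type == "String":
        return {"type": "string"}
    if bmm_type.startswith("List<") and bmm_type.endswith(">"):
        return {"type": "array", "items": type_schema(bmm_type[5:-1])}
    concrete = concrete_descendants(bmm_type)
    if concrete == [bmm_type]:
        return {"$ref": schema_ref(bmm_type)}  # no polymorphism needed
    return {
        "oneOf": [{"$ref": schema_ref(c)} for c in concrete],
        "discriminator": {"propertyName": "_type",
                          "mapping": {c: schema_ref(c) for c in concrete}},
    }


def all_properties(name):
    """Properties of a class including inherited ones (subclass wins)."""
    props = {}
    while name is not None:
        props = {**CLASSES[name]["properties"], **props}
        name = CLASSES[name]["parent"]
    return props


def to_components():
    schemas = {}
    for name, spec in CLASSES.items():
        if spec["abstract"]:
            continue  # abstract types only appear via oneOf + discriminator
        props = {"_type": {"type": "string", "const": name}}
        props.update({p: type_schema(t) for p, t in all_properties(name).items()})
        schemas[name] = {"type": "object", "properties": props}
    return {"components": {"schemas": schemas}}


cluster = to_components()["components"]["schemas"]["CLUSTER"]
print(cluster["properties"]["items"]["items"]["discriminator"]["mapping"])
# {'ELEMENT': '#/components/schemas/ELEMENT', 'CLUSTER': '#/components/schemas/CLUSTER'}
```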

The current model works for validation and to generate code and human-readable documentation. However, it has the following problems:

- the code to generate the files is still in a branch in Archie, and needs to be updated, reviewed and merged

- one file, not structured in packages

- nearly no documentation from the models, will have to be added to the BMM files

- it contains only the models, no REST API definition yet.

I think it would be good to continue this work by creating an OpenAPI definition of the OpenEHR REST API that references the autogenerated models. Clients could then be autogenerated, rather than relying on someone hand-coding a library such as Archie. The generated models can easily be referenced from such an API, and fields can be made mandatory for specific APIs from within the API definition, without changing the schema at all. It would also be easier to test whether a given implementation conforms to the OpenEHR models.
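
A minimal sketch of that idea, written as a Python dict for consistency with the other examples: the endpoint's request body reuses the generated COMPOSITION model unchanged via `$ref`, and an `allOf` adds an endpoint-specific `required` list. The path follows the existing openEHR REST API (`POST /ehr/{ehr_id}/composition`), but making `archetype_details` mandatory is a hypothetical example, the COMPOSITION `required` list is illustrative, and in practice the model would be referenced from the generated file rather than inlined. `effective_required` is a small helper added only to show the combined effect.

```python
def ref(name):
    return {"$ref": f"#/components/schemas/{name}"}


def with_required(model, *fields):
    """Reuse a generated model as-is, but require extra fields for one endpoint."""
    return {"allOf": [ref(model), {"required": list(fields)}]}


OPENAPI = {
    "openapi": "3.1.0",
    "info": {"title": "openEHR REST API (sketch)", "version": "0.0.1"},
    "paths": {
        "/ehr/{ehr_id}/composition": {
            "post": {
                "parameters": [{"name": "ehr_id", "in": "path", "required": True,
                                "schema": {"type": "string"}}],
                "requestBody": {
                    "required": True,
                    "content": {"application/json": {
                        "schema": with_required("COMPOSITION", "archetype_details")}},
                },
                "responses": {"201": {"description": "Created"}},
            }
        }
    },
    # Stand-in for the autogenerated model; the required list is illustrative.
    "components": {"schemas": {"COMPOSITION": {
        "type": "object",
        "required": ["name", "archetype_node_id", "language", "territory",
                     "category", "composer"],
    }}},
}


def effective_required(schema, components):
    """Union of 'required' lists, following allOf and local $refs."""
    if "$ref" in schema:
        name = schema["$ref"].rsplit("/", 1)[1]
        return effective_required(components["schemas"][name], components)
    fields = set(schema.get("required", []))
    for part in schema.get("allOf", []):
        fields |= effective_required(part, components)
    return fields


post = OPENAPI["paths"]["/ehr/{ehr_id}/composition"]["post"]
body_schema = post["requestBody"]["content"]["application/json"]["schema"]
model_only = effective_required(ref("COMPOSITION"), OPENAPI["components"])
print(sorted(effective_required(body_schema, OPENAPI["components"]) - model_only))
# ['archetype_details']
```

The COMPOSITION schema itself is untouched; only this endpoint demands the extra field, so other endpoints can keep using the plain model.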

Again, opinions?