Following a discussion in the SEC yesterday. Where there was agreements on all the outstanding issues for the migration of the community to ADL2.4. (Huge success for the community! proving a willingness to work together on difficult topics and to make significant compromises/investments for a shared future). A discussion came up what the migration to ADL2.4 will mean for all the different ADL artefacts and its formats/serialisation. This will become important during the migrations of the different software components in the ecosystem (ADL editing, CKM, CDR). It’s quite a niche topic so mostly relevant to the SEC and implementors.

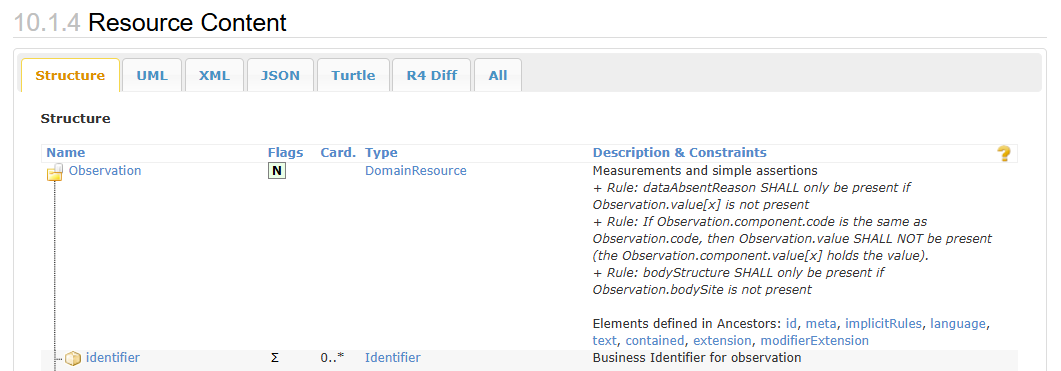

Currently there are many different data format and

serialisations: ADL, json, XML, ODIN

file extensions: .opt .opt2 .adl. adls. adlt .json .oet

artefacts 1.4: archetype (adl, json, xml), adl1.4 template (oet, Better’s native json), adl1.4 operational template (.opt) Better’s web template (json?) SDT, TDD, and probably others.

artefacts 2.4 (unchanged from [2.0-2.4]): adls (default differential archetype), adl (flattened archetype), adlt (differential template, technically identical to adls, Nedap invented file extension), opt2 (unspecified).

In my mind there will be two main artefacts formalism/serialisation in the future:

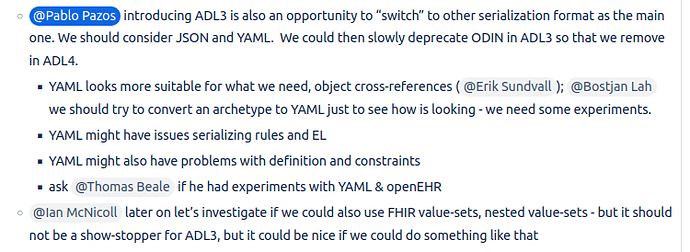

1: design artefacts: archetypes and templates will be in adl2.4 format and serialisation. File extensions will be .adls for differential archetype, .adlt for differential template (and let’s try to deprecate the flattened archetype .adl formalism) from adl3 on I’d like to change the serialisation of these artefacts to yaml, (file extensions to be decided; we can consider json, but I’m not in favour of including it in the specs).

2: operational artefacts: a flattened format as a use case specific dataset format, this will be mainly used at the the api level imho. So probably openapi format makes sense (with json and/or yaml serialisations, I don’t care). This should ‘replace’ the Better specific web template, and the openEHR TDD, SDT, etc. imho. And if needed (probably useful for validation and less implementation/language dependency) we can retain the .opt2 in OpenAPI ADL yaml, that still contains AT codes instead of (English) language key’s. So unrecommended for use by client app developers.

There’s probably a lot of details to fill in, and probably some major controversies, so let’s except a bit of chaos in this topic. Very curious for your thoughts @SEC @SEC-experts

Edit: the current formats are described in Simplified Data Template (SDT)

Please keep discussions on how to achieve/generate/validate the formalism on other topics, like this one JSON Schema and OpenAPI: current state, and how to progress.

I’d like to focus this to topic on discussing a desired scenario and how to simplify and standardise the currently available openEHR formalism. Since the amount and complexity is a barrier of entry to openEHR.