Just to make all the people who do serious modelling of actual questionnaires crazy, I’d like to raise a philosophical question: should we model questionnaires as literal tree structures isomorphic to the implied tree structure of the forms they are usually expressed as? Or should we attempt to represent every question / option / field using some existing archetype data point? Let’s call this the literal versus semantic approach.

NB: When I say ‘we’, I of course mean ‘you’, the clinical modellers.

But the question is a serious one and there are data processing, querying and semantics angles that I think are important.

I’ve been taking a good look at the JAMAR (Juvenile Arthritis Multidimensional Assessment Report) questionnaire, among others.

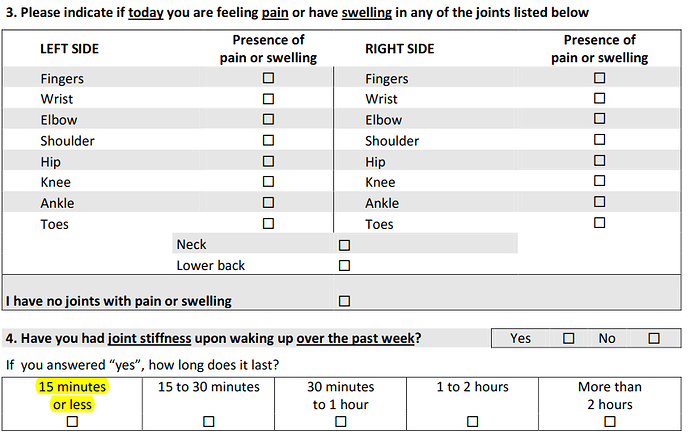

The first thing one realises when trying to do the modelling in a semantic way is that the match for items in CKM is often only approximate, semantically as well as structurally. For example, these parts:

could in theory be represented by data points in the various signs/symptoms CLUSTER archetypes, arranged within OBSERVATIONs. Or maybe pain archetypes. But it’s not a very close match. Other questions, or question sets, are clearly an artefact of the tool design, i.e. they follow some theory of the experts who worked out which questions reveal the most diagnostic or disease-state information.

My initial conclusion is that we should model questionnaires in a literal fashion, i.e. without trying to represent data points with any proper ‘semantic’ archetypes. I think we should do this because:

- it’s the only way to be faithful to the questionnaire, and

- there’s no way to know whether the true intention of any item in the questionnaire really is semantically the same as some data point in a previously defined openEHR archetype, except maybe for a few things like a pain scale.
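To make the ‘literal’ option concrete, here is a minimal sketch, assuming a hypothetical pair of node types (`Section`, `Question`) that simply mirror the layout of the printed form. None of these names come from the openEHR RM, and the question wording is invented for illustration, not taken from JAMAR. The point is that each answer’s address is a path made of form-local ids, with no reference to any clinical archetype.

```python
from dataclasses import dataclass, field
from typing import List, Union

# Hypothetical node types for a "literal" questionnaire model: the tree mirrors
# the layout of the printed form, not any clinical archetype.

@dataclass
class Question:
    id: str                      # local id, meaningful only within this questionnaire
    text: str                    # question wording exactly as printed
    options: List[str] = field(default_factory=list)  # empty list = free-text / numeric field

@dataclass
class Section:
    id: str
    title: str
    children: List[Union["Section", Question]] = field(default_factory=list)

def paths(node, prefix=""):
    """Yield (path, question) for every question, using form-local ids as path segments."""
    path = f"{prefix}/{node.id}"
    if isinstance(node, Question):
        yield path, node
    else:
        for child in node.children:
            yield from paths(child, path)

# Hypothetical questionnaire fragment (not actual JAMAR content).
form = Section("q", "Example questionnaire", [
    Section("s1", "Part 1", [
        Question("q1", "Has your child had stiffness on waking this week?", ["No", "Yes"]),
        Question("q2", "Rate your child's pain over the past week (0-10)"),
    ]),
])

for p, q in paths(form):
    print(p, "->", q.text)
```

Faithfulness to the form comes for free here; the cost is that a path like `/q/s1/q1` means nothing outside this one questionnaire.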

If this approach were used, implications would seem to include:

- every questionnaire is its own thing (no / little reuse)

- since there’s no use of ‘standard’ archetype data points, queries for, say, ‘joint stiffness’ based on some standard archetype are not going to find any ‘joint stiffness’ answers in recorded questionnaires.
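The second implication can be illustrated with a small sketch. Both the form-local paths and the ‘standard’ archetype path below are hypothetical placeholders, not real openEHR paths or AQL. The query matches stored paths exactly, which is roughly what a query written against a standard archetype does: it never sees the questionnaire’s own stiffness item.

```python
# Answers recorded under the literal model are keyed by form-local paths.
# Both the paths and the "standard" archetype path below are hypothetical.
recorded = {
    "/example_q/s1/q1": "Yes",   # the form's own morning-stiffness question
    "/example_q/s1/q2": 6,       # the form's own pain rating
}

# A query written against a hypothetical standard symptom archetype data point.
STANDARD_JOINT_STIFFNESS = "CLUSTER.symptom_sign/joint_stiffness/presence"

def query(records, path):
    """Return answers stored exactly at `path`; no semantic matching is attempted."""
    return [v for k, v in records.items() if k == path]

print(query(recorded, STANDARD_JOINT_STIFFNESS))  # [] : the literal answer is invisible
print(query(recorded, "/example_q/s1/q1"))        # ['Yes']
```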

At first sight this seems sub-optimal, but if we look a bit harder at the problem, we might try to:

- create a growing library of ‘standard question archetypes’ to cover questions that recur across questionnaires (there are a number of EMR-related products that work on this basis)

- start to design questionnaires more based on this standard library rather than each one being a new invention

- find a way to link such questions to what we think are the relevant data points in the main clinical archetypes (perhaps by terminology)
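One way the library idea and the terminology link might fit together is sketched below. Everything in it is hypothetical: the library entries, the two questionnaires, the archetype path, and the `EX:` codes, which are placeholders rather than real SNOMED CT or LOINC codes. Each questionnaire keeps its literal item ids but maps them to library questions; a query that starts from an archetype data point follows its terminology binding to find matching answers across questionnaires.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical "standard question" library: each reusable question carries a
# terminology binding. The codes are placeholders, not real SNOMED CT / LOINC codes.
@dataclass(frozen=True)
class StandardQuestion:
    key: str
    text: str
    concept: str   # placeholder terminology code

LIBRARY: Dict[str, StandardQuestion] = {
    "morning_stiffness": StandardQuestion("morning_stiffness", "Stiffness on waking?", "EX:joint-stiffness"),
    "pain_0_10": StandardQuestion("pain_0_10", "Pain score (0-10)", "EX:pain-severity"),
}

# Two hypothetical questionnaires, each mapping its own item ids to library keys.
QUESTIONNAIRES: Dict[str, Dict[str, str]] = {
    "questionnaire_a": {"a1": "morning_stiffness", "a2": "pain_0_10"},
    "questionnaire_b": {"b7": "morning_stiffness"},
}

# Hypothetical archetype data point bound to the same code (the "link by terminology").
ARCHETYPE_BINDINGS: Dict[str, str] = {
    "CLUSTER.symptom_sign/joint_stiffness": "EX:joint-stiffness",
}

def answers_for_concept(records: List[Tuple[str, str, object]], concept: str):
    """Return (questionnaire, item, value) for answers whose library question has `concept`."""
    hits = []
    for questionnaire, item, value in records:
        key = QUESTIONNAIRES.get(questionnaire, {}).get(item)
        if key is not None and LIBRARY[key].concept == concept:
            hits.append((questionnaire, item, value))
    return hits

records = [("questionnaire_a", "a1", "Yes"), ("questionnaire_a", "a2", 6), ("questionnaire_b", "b7", "No")]

# Start from the archetype data point, follow its binding, find questionnaire answers.
concept = ARCHETYPE_BINDINGS["CLUSTER.symptom_sign/joint_stiffness"]
print(answers_for_concept(records, concept))
# [('questionnaire_a', 'a1', 'Yes'), ('questionnaire_b', 'b7', 'No')]
```

The questionnaires themselves stay literal; the semantic link lives in the bindings, so it can be added or corrected later without remodelling any form.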

One problem with the idea of a ‘standard questionnaire library’ is of course that the questionnaires that come into use tend to come from all over the world, from research groups, specific institutions, PhD authors, and so on, i.e. there’s little common culture (at least that I am aware of). Whether there is any future in such a library seems to me orthogonal to the original proposition: that we should model questionnaires using a mostly literal approach.

A fair bit of this would seem to apply to scores and scales as well: they are all tools of some kind, designed to make it efficient to triage or classify patients so as to determine an appropriate care pathway, flag them as at-risk, etc.

Anyway, the purpose of posting here is to run the idea past people who know a lot more about clinical questionnaires than I do, and see if any of the above resonates. There might be practical conclusions, such as dedicated questionnaire and/or question RM types to make direct representation easier.

Again, zero interop value, but at least they get some consistency re metadata, I guess.