Great question, Hugo.

We struggled quite a lot with this in the early days of openEHR, as we came at the issue with openEHR’s prime function being to support the primary ‘frontline’ recording of patient records and, in truth, with a bit of a bias towards doctors’ ways of recording.

In that world, we generally do not record negatives like ‘No diabetes’ as part of, for instance, a Problem list. We only record the positive diagnoses, other than occasional statements of Exclusion like ‘No evidence of diabetes’ after some investigations. But this is about a ‘ruled-out’ diagnosis, and even then it would not appear on a problem list.

However, Yes/No questions do appear in front-line records, especially in nursing records, ‘clerking’ sheets filled in by junior docs, or protocol-driven procedures.

In these cases the statement Diabetes - Yes is generally not ‘diagnostic’, in the sense of a patient suddenly being given that diagnosis for the first time. It is about information gathering and sometimes safety checking, where often the person recording the information is not trained or empowered to ‘make a diagnosis’. It is also not where or how I would expect decision support to be triggered. If a nursing admission document said Diabetes: yes, and there was no Problem/Diagnosis: Diabetes mellitus type 2 entry in the patient’s problem list, I would expect that to be reviewed by a more senior staff member and entered.

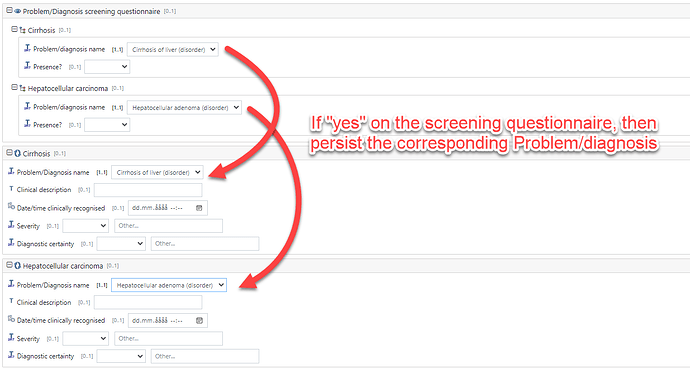

So that is why, after battling for some time to try to make these two modes of recording seamless, we recognised that they are actually quite different, need to be represented differently and, importantly, must not be confused when querying. So we have the Problem/diagnosis archetype and an equivalent Screening Yes/No archetype.

Registries generally use the screening pattern because historically they have been disconnected from any source EHR data, so the information has to be curated from multiple sources, mostly paper. Because the registry has a specific focus, the explicit negation is important, as it tells the research community definitively (at the point of registry update) that the person completing that record could not find any evidence of diabetes. So there is a curation and protocol-adherence aspect.

A further issue is that registry-type questions often ‘munge’ diagnoses, e.g. Angina/MI or other heart disease Y/N, or that they ask for further detailed info in response to a Yes, e.g. a specific cancer grade.

So there is a gap between the primary record and the registry, which right now is annoying.

Can you give an example?

Ultimately, this problem is fixable once the source system data is fully available and queryable, and registries start asking for positive problems only. In many respects UK GP systems work like this for reporting purposes.

Right now, I would model the Registry using Questionnaires but use AQL on the Problem/Diagnosis to pre-fill/suggest the responses. However, you cannot use Exclusions to pre-populate, as a statement like ‘no evidence of diabetes’ is only true at the time it was made. The patient might develop diabetes the following week.
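To make that a bit more concrete, here is a rough Python sketch of the pre-fill idea. It is only an illustration under several assumptions: the AQL paths are what I would expect from the published Problem/Diagnosis archetype and should be checked against your own template, the `REGISTRY_QUESTIONS` mapping and `suggest_answers` function are hypothetical names, and matching on free-text problem names stands in for what would really be terminology-code matching. Note that a missing problem leaves the question unanswered rather than suggesting ‘No’, and Exclusion entries are deliberately never consulted.

```python
from typing import Dict, List, Optional, Set

# AQL sketch: problem names from the patient's Problem/Diagnosis entries.
# Paths are assumed from the published archetype; verify against your template.
PROBLEM_AQL = """
SELECT ev/data[at0001]/items[at0002]/value/value AS problem_name
FROM EHR e[ehr_id/value = $ehr_id]
CONTAINS EVALUATION ev[openEHR-EHR-EVALUATION.problem_diagnosis.v1]
"""

# Hypothetical mapping from registry questions to the problem names that
# would justify suggesting "Yes". Real systems would match on codes.
REGISTRY_QUESTIONS: Dict[str, Set[str]] = {
    "Diabetes": {"diabetes mellitus type 1", "diabetes mellitus type 2"},
    "Angina/MI or other heart disease": {"angina", "myocardial infarction"},
}


def suggest_answers(problem_names: List[str]) -> Dict[str, Optional[str]]:
    """Suggest registry answers from positive problem list entries only.

    Returns "Yes" where a matching problem is recorded, otherwise None,
    meaning the person completing the registry form must answer it.
    """
    recorded = {name.strip().lower() for name in problem_names}
    suggestions: Dict[str, Optional[str]] = {}
    for question, matching_names in REGISTRY_QUESTIONS.items():
        # Only a positive problem produces a suggestion; absence of a
        # problem is not treated as evidence for "No".
        suggestions[question] = "Yes" if recorded & matching_names else None
    return suggestions


# Example: names as they might come back from running PROBLEM_AQL.
print(suggest_answers(["Diabetes mellitus type 2"]))
```

The suggestion is still only a suggestion: the person curating the registry record confirms or overrides it, which keeps the explicit Yes/No in the Questionnaire and the diagnosis in the problem list as separate things.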

@siljelb @heather.leslie and @bna may have other views?

Phew - glad I got that ‘off my chest’